I’ve been using Openfiler for a long time now, but I’m always open to alternatives. Recently I stumbled across NexentaStor, a software product you can load onto your servers to turn them into filers, much like Openfiler. NexentaStor is available both as a Community Edition and as a relatively inexpensive Enterprise Edition, which comes with all the bells and whistles, such as built-in block-level replication.

NexentaStor is “Linux-like”, but it is based on an OpenSolaris kernel, not Linux. One big advantage of this is that OpenSolaris (and hence NexentaStor) runs the ZFS file system. ZFS is a next-generation file system that offers all sorts of file system goodness, chief among them that it’s designed to run on lots of cheap disks. No more expensive RAID arrays required for reliability.

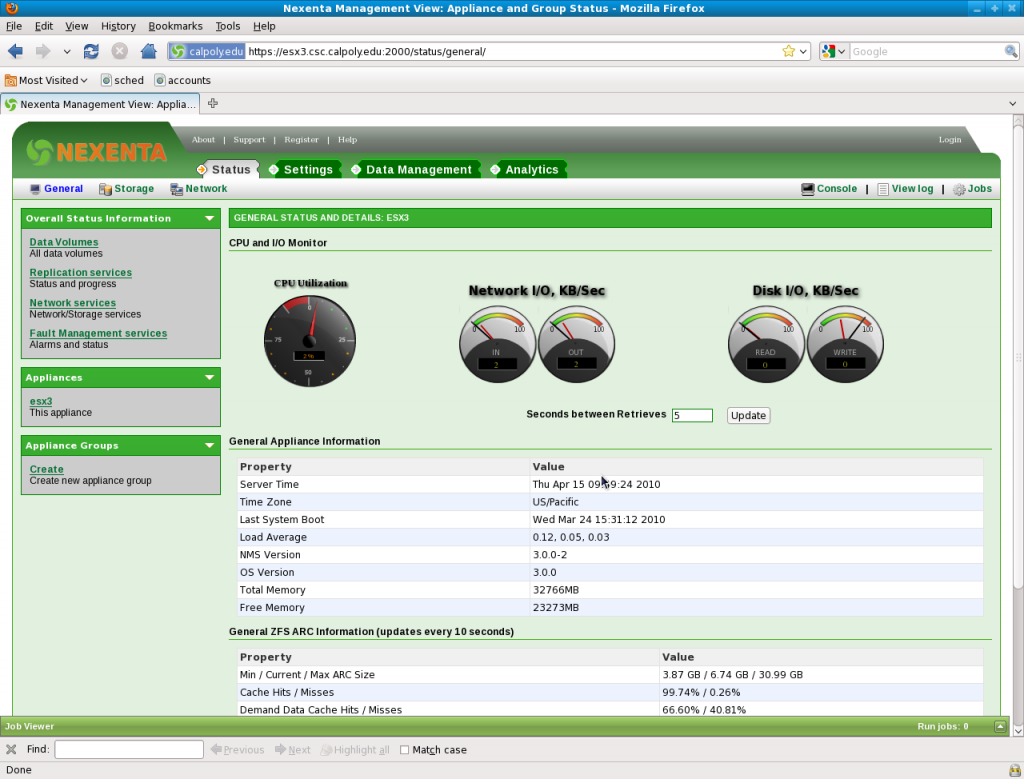

The free NexentaStor 3.0 Community Edition has been working fine for me in testing as a back end for XenServer hypervisors. It has been up and stable for a couple of weeks with multiple VMs running on it.

The Enterprise Edition offers everything the Community Edition supports *PLUS* plugins for replication, virtualization, and more. You can easily build an “enterprise class” filer with the same features the “big boys” have, for much, much less.

The NexentaStor website says:

NexentaStor 3.x Community ISO CD images can be installed on “bare-metal” x86/64 hardware and VM installed images are available below as well. The hardware compatibility lists for OpenSolaris 10.03 indicate what is supported. The NexentaStor installer also verifies hardware compatibility before installation commences. For more information on hardware compatibility please visit NexentaStor FAQ.

This is a major NexentaStor release, with many new features, improved hardware support, and many bug fixes over the older Developer Edition including:

- In-line deduplication for primary storage and backup

- Free for up to 12 TB of used storage with no limits on the total size of attached storage

- Supports easy upgrade to future Community Edition releases and to Enterprise Edition licenses

- Support for user and group quotas

- The ability to automatically expand pools

Commercial support and optional modules are not available for the Community Edition. Please click on ‘Get support’ or ‘Get enterprise’ above to learn more about the support options and additional capabilities of NexentaStor Enterprise Edition.

Recommended system requirements:

- 64 bit processor (32 bit not recommended for production)

- 768MB RAM minimum for evaluation, 2GB okay, 4GB ideal

- 2 identical, relatively small disks for a high-availability system folder (for the operating system and the rest of the appliance’s software)
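To make the recommended requirements above concrete, here is a minimal sketch that compares a machine against them. The thresholds come straight from the list; the function name `assess_system` and the idea of checking these values in code are illustrative assumptions, not part of NexentaStor or its installer.

```python
# Minimal sketch: compare a machine against the recommended NexentaStor
# requirements quoted above. The function name is hypothetical; only the
# thresholds (64-bit CPU, 768MB / 2GB / 4GB RAM, 2 system disks) come from the list.

MIB = 1024 ** 2
GIB = 1024 ** 3


def assess_system(ram_bytes: int, is_64bit: bool, system_disks: int) -> list:
    """Return notes describing how a system compares to the recommended specs."""
    notes = []
    if not is_64bit:
        notes.append("32-bit CPU: not recommended for production")
    # RAM tiers: 768MB minimum for evaluation, 2GB okay, 4GB ideal.
    if ram_bytes < 768 * MIB:
        notes.append("RAM below 768MB evaluation minimum")
    elif ram_bytes < 2 * GIB:
        notes.append("RAM sufficient for evaluation only")
    elif ram_bytes < 4 * GIB:
        notes.append("RAM okay")
    else:
        notes.append("RAM ideal")
    # Two identical disks are recommended so the system folder is redundant.
    if system_disks < 2:
        notes.append("fewer than 2 system disks: no high-availability system folder")
    return notes


print(assess_system(2 * GIB, True, 2))
```

The installer performs its own hardware compatibility check, so a script like this would only be a quick pre-purchase sanity check.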

Hi,

Thank you for the article. I’m testing NexentaStor on a self-built server with 2x2TB WD Green disks and an Intel Pro/1000 PT Server NIC. I run the tests in Windows Server 2008 on XenServer 5.6. When using a CIFS share, the data transfer is smooth (according to Task Manager) and uses the NIC very well. But when using iSCSI, the transfer is neither smooth nor responsive. It goes like this: nothing, nothing, spike, nothing, nothing, spike. The transfer speed during the spikes is much higher than the CIFS speed, but then there is a delay of a few seconds. I tried turning off the write-back cache, but that resulted in a transfer speed of a few MB/s. Have you experienced anything like this?

Hi, thanks for writing. No, I never saw anything like that. I’ve used Nexenta as an iSCSI back end for XenServer a couple of times. I ran Windows virtual machines on the XenServer and ran Iometer on the VMs for days or weeks at a time. This exercised each VM’s “C: drive”, which was really the iSCSI disk presented by Nexenta. The access times, speeds, etc. reported by Iometer were smooth and steady. On better hardware, Iometer reported faster access, but I never saw intermittent response from iSCSI on Nexenta.

Hmm, googling a bit, it seems that people are having issues with WD Green drives showing bursty behavior; see https://mattconnolly.wordpress.com/2010/06/30/western-digital-green-lemon/ or https://jmlittle.blogspot.com/2010/03/wd-caviar-green-drives-and-zfs.html or https://opensolaris.org/jive/thread.jspa?threadID=129274&tstart=225.

If you have some other make of drive lying around, try using that and see if the bursty behavior persists. If the other drive is fine and the WD Green ones do it, then I’d say it’s a WD issue.
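To compare two drives in a less subjective way than eyeballing Task Manager, you could record throughput once per second during the same copy and summarize it. The sketch below is an assumption-laden illustration: the `burstiness` helper, the 10%-of-peak idle threshold, and the sample numbers are all hypothetical, chosen only to mirror the “nothing, nothing, spike” pattern described above.

```python
# Minimal sketch: quantify how "bursty" a transfer is from per-second
# throughput samples (e.g. noted by hand from Task Manager or iostat).
# The helper name, the 10% idle threshold, and the sample data are hypothetical.
from statistics import mean, pstdev


def burstiness(samples_mb_s, idle_threshold=0.1):
    """Summarize throughput samples taken at a fixed interval."""
    avg = mean(samples_mb_s)
    peak = max(samples_mb_s)
    # A sample counts as "idle" if it falls below idle_threshold * peak.
    idle = sum(1 for s in samples_mb_s if s < idle_threshold * peak)
    return {
        "mean": avg,
        "peak": peak,
        "idle_fraction": idle / len(samples_mb_s),
        # Coefficient of variation: standard deviation relative to the mean.
        "cv": pstdev(samples_mb_s) / avg if avg else float("inf"),
    }


# "nothing, nothing, spike" pattern vs. a steady transfer (made-up numbers)
bursty = burstiness([0, 0, 180, 0, 0, 175, 0, 0, 190])
steady = burstiness([58, 61, 60, 59, 62, 60, 61, 59, 60])
print(bursty["idle_fraction"], steady["idle_fraction"])
```

Running the same copy against a WD Green pool and a pool built from another make of drive, a high `idle_fraction` and `cv` on only the WD Green pool would point at the drives rather than at iSCSI.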

@Adams This problem, which also occurs with CIFS, is caused by the number of drives you have and the amount of write-back cache the install is using. The way ZFS works, it flushes the cache from system memory every few (around 10) seconds, and during that time no writes can occur (I don’t know why). If you have, say, 2GB in RAM and your disks’ maximum sequential write speed is 500MB/s, you get 4 seconds of no writes. So unless you increase the number of disks in the array, you need to limit the write-back cache size and change the flush interval to reduce or eliminate this problem. Look on the opensolaris.org forums; I had the same problem around a year ago and got the above information there.
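The arithmetic in that comment can be written out as a short sketch. It uses a deliberately simplified model (an assumption, not how ZFS actually schedules I/O): the stall lasts roughly as long as it takes the pool to write the buffered data at its sequential speed. Decimal units are used so the numbers match the comment’s 2GB / 500MB/s = 4 seconds. The function names are hypothetical.

```python
# Minimal sketch of the flush-stall arithmetic described above.
# Simplified model (assumption): while buffered writes are flushed, new writes
# wait, so stall ~= buffered bytes / pool sequential write throughput.

MB = 1000 ** 2
GB = 1000 ** 3


def stall_seconds(dirty_bytes, pool_write_bytes_per_s):
    """Approximate time needed to flush dirty_bytes at the given throughput."""
    return dirty_bytes / pool_write_bytes_per_s


def max_dirty_bytes(target_stall_s, pool_write_bytes_per_s):
    """Largest write-back cache that keeps the stall under target_stall_s."""
    return target_stall_s * pool_write_bytes_per_s


# The example from the comment: 2GB buffered, 500MB/s sequential writes.
print(stall_seconds(2 * GB, 500 * MB))       # 4.0
# To keep stalls under 1 second on the same disks, cap the cache at 500MB.
print(max_dirty_bytes(1.0, 500 * MB) / MB)   # 500.0
```

The two functions reflect the two remedies in the comment: shrinking the cache lowers `dirty_bytes`, while adding disks raises `pool_write_bytes_per_s`; either shortens the stall.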

To be honest, I’m considering replacing my current Openfiler environment with NexentaStor. I’ve been reading a lot about it before actually implementing it. What interests me most is ZIL and L2ARC caching. Openfiler is missing this “feature” (perhaps compiling in dm-cache might provide it).

But regarding the issue described by Greg Porter, you may want to consider ZIL caching on an SSD.

@greg.porter

I’ve been running Nexenta Core with 8 x 1TB disks for around a year now, and the only things I had to replace were 2 WD Green drives. Those disks are probably good for workstations, but certainly not for servers.

Get either Hitachi or Seagate drives and you’ll have peace of mind.

As a general rule for ZFS:

– Feed it enough RAM and ZFS will love you

– Give ’em ZIL and L2ARC and it will fly

@Dan Hitachi or Seagate???

Just get drives made for NAS. Western Digital Red drives are made specifically for NAS environments, and WD Black is also a good choice. I’ve had Seagate drives fail much more frequently than WDs. We have several RAID 5 and RAID 5+0 servers, and an HP storage server with Seagate disks lost one disk per month (even the replacement Seagate disks) until we replaced all of them with WD Black.