Eager readers want to know if EqualLogic array firmware upgrades are non-disruptive, so we tested it in our mighty remote environment!

Bottom line: The firmware update process halts I/Os during cut-over from one controller to the other. This takes something like 27 seconds (26969 ms). As most operating systems have a disk timeout value of 30 seconds or longer, no one really notices.
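To put that margin in numbers, here is a minimal sketch of the arithmetic. The function name `timeout_headroom_ms` is ours, purely for illustration, and the 30 and 60 second timeouts are example values; check what your own guests are actually configured with.

```python
def timeout_headroom_ms(stall_ms: int, disk_timeout_s: int = 30) -> int:
    """Return the milliseconds left before a guest's disk timeout would fire.

    A negative result means the stall outlasts the timeout.
    """
    return disk_timeout_s * 1000 - stall_ms


measured_stall_ms = 26969  # the stall reported in this post

print(timeout_headroom_ms(measured_stall_ms))      # against a 30 s timeout
print(timeout_headroom_ms(measured_stall_ms, 60))  # against a 60 s timeout
```

With a 30 second timeout, the headroom is only about 3 seconds, so a slightly slower failover would eat all of it.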

Some background and how we tested:

If you have more than one array, and at least the largest array’s worth of free room, then the official way to do a firmware update is to use a “Maintenance Pool”. When you assign a member array to the Maintenance Pool, the volumes on that array will migrate to the other member(s) of your production pool. Depending on how much you have to move, this may take a few hours or run overnight. No one notices the moves. Once the member is evacuated, update it, reboot it, put it back into the production pool, and move on to the next array. We do this in production and there is indeed no interruption whatsoever. Using a maintenance pool works well.

In a single-member pool, or if you are short of room, using a maintenance pool is not an option. We were curious how bad the interruption would be in a single-member pool, so we tested it. In our test vCenter environment, we have one ESXi host connected to one dual-controller PS6000 EqualLogic array. VMs live on datastores provided by the array. This is a minimalistic all-your-eggs-in-one-basket configuration.

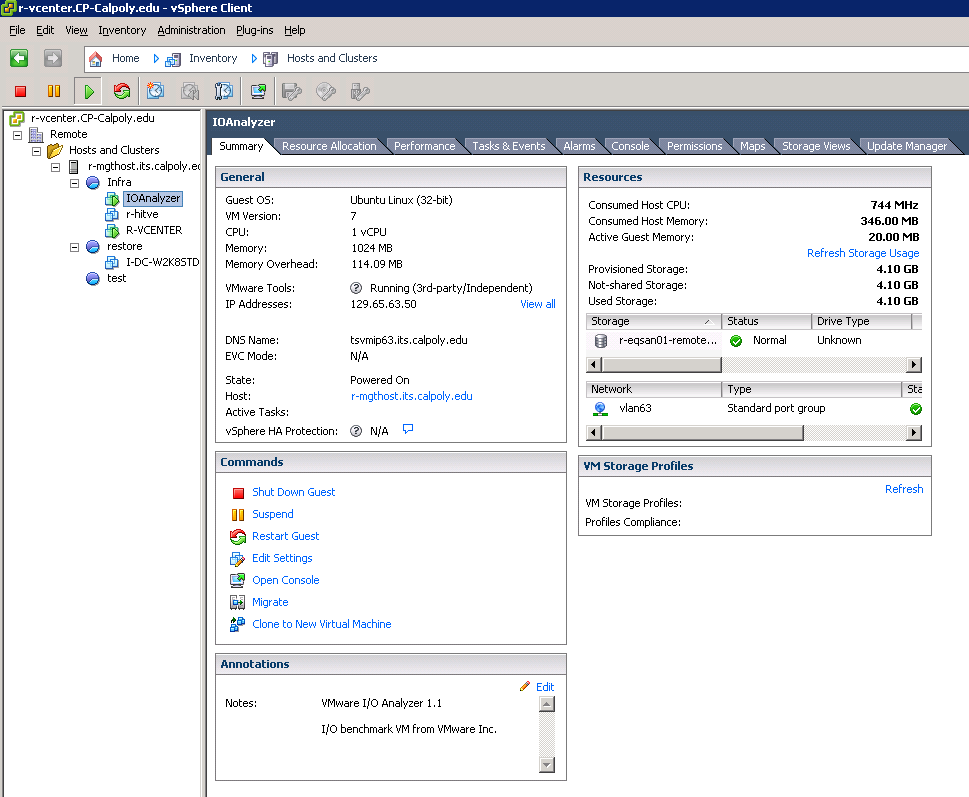

We deployed VMware’s handy I/O Analyzer, a simple-to-use appliance based on IOmeter for disk benchmarking. We installed it as the readme says.

We followed the usual EqualLogic firmware update procedure. (EqualLogic docs are locked behind their support site, which is unfortunate. If you have an active support contract you can get the docs and firmware there.) We logged into the support site and downloaded the firmware.

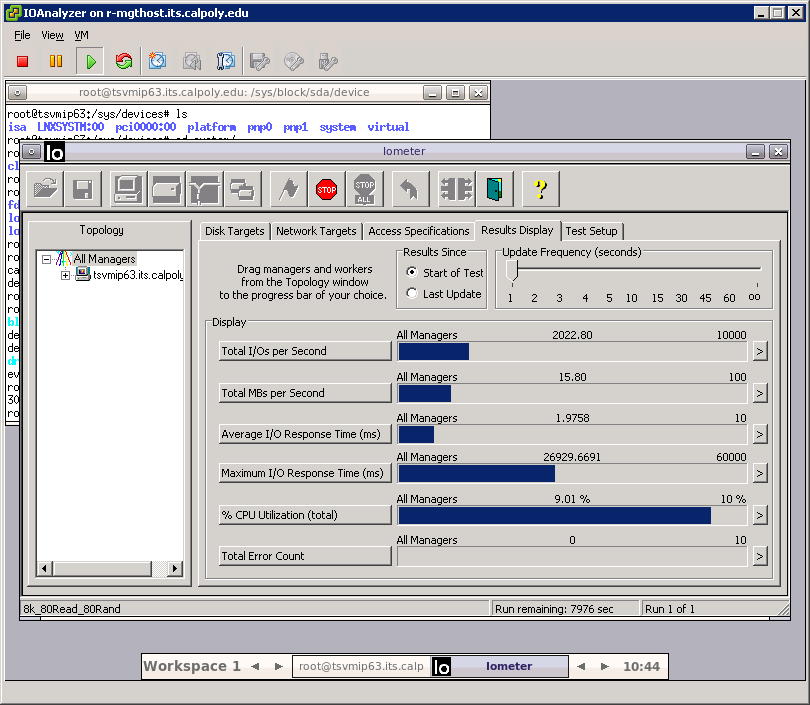

Next we started an I/O Analyzer disk benchmark run to put some I/O load on the array.

Then we ran the update procedure from the Group Manager GUI. It has basically two parts.

The first part just uploads and stages the firmware. This is not at all disruptive. I/O Analyzer didn’t notice anything.

Then to activate the staged firmware, you have to reboot the array. We did this from the command prompt.

The reboot happens intelligently. First it reboots the (non-active) secondary controller, and applies the update. The was-secondary, soon-to-be-primary, controller reboots with the new firmware.

Once the updated secondary comes up, the array fails over to it. This is where a small hiccup is noticeable. I/Os stopped for about 27 seconds during the cut-over (26929 ms). This is short enough that no actual grief ensued.
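If you want to measure a pause like this yourself without a benchmarking appliance, one rough approach is to issue small synchronous writes in a loop and look for the longest gap between completions. The sketch below is not how we measured it (we read the figure from I/O Analyzer). The function names, the 4 KiB write size, and the probe interval are all assumptions chosen for illustration.

```python
import os
import time


def longest_gap_ms(completion_times):
    """Return the largest gap, in ms, between consecutive completion timestamps."""
    gaps = [b - a for a, b in zip(completion_times, completion_times[1:])]
    return max(gaps, default=0.0) * 1000.0


def probe(path, duration_s=1.0, interval_s=0.1):
    """Rewrite and fsync a 4 KiB block repeatedly; record when each write completes.

    If the storage stalls, fsync blocks, and the stall shows up as a long gap.
    """
    completions = []
    deadline = time.monotonic() + duration_s
    with open(path, "wb") as f:
        while time.monotonic() < deadline:
            f.seek(0)
            f.write(b"x" * 4096)
            f.flush()
            os.fsync(f.fileno())  # force the write down to the disk
            completions.append(time.monotonic())
            time.sleep(interval_s)
    return completions


# Example (hypothetical path on a datastore-backed disk):
#   times = probe("/mnt/testvol/probe.bin", duration_s=600, interval_s=0.1)
#   print(f"longest pause: {longest_gap_ms(times):.0f} ms")
```

The reported gap includes the probe interval, so keep the interval small compared with the pause you expect to see.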

The now-secondary, was-primary, controller then reboots and applies the firmware.

Both I/O Analyzer and the vCenter Windows VM were active during the update, and both continued to chug along happily through the hiccup. Neither seemed to notice it, and neither logged anything related to disk issues.

All in all the update process was pretty painless, even with the array under load.

Hi,

Please help support the following site for the Equallogic community:

https://equallogicfirmware.wordpress.com

But it IS disruptive. The title is misleading, and when I came across it I was hoping to learn that the upgrades were non-disruptive. We have EqualLogic SANs and do not upgrade them as frequently as we do EMC because they are disruptive in nature. With hundreds of VMs, how do I know there is not going to be an issue with a 27 second pause? 2 seconds, I agree, is nothing (that is the same as a VM migration), but 27 seconds could become 30 or 45. Sorry, but this is not good news.

We’ve had major issues with 6510E series EqualLogics. These take 45 seconds before pings even return on the SCSI network. Last time we braved a live failover, it killed half our VMware machines. Not recommended if you need real HA.

We have 4 EqualLogic arrays. I updated one at our DR site this morning with one VM running, and the VM actually had zero downtime. I noticed only one timeout while pinging each controller’s address during the process, and the VM was never affected. The process took around 25 minutes.

I would never do this on my production SAN that runs the VMs; I would always book an outage and shut down. However, much like the author of this article, I have seen it work with no interruption, though the I/O from my one running VM would never compare to that of 100.

Call me chicken, but I would always book an outage and shut down the VMs when doing this. That small maintenance window would take less time than restoring from backup.

@Dan

Since the above site is no longer available, is there another place to get EQL firmware updates without active maintenance? Dell won’t warranty old units, and we would like to update the firmware.